Train any AI, run any LLM – smarter, faster, cheaper

Save big, train fast. With our distributed training platform, you’ll cut costs, save time, and skip the headaches.

Ollama-powered

Onboarding in minutes

Cut costs by up to 50%

EU secure

No worries, your data stays safely within EU borders, fully aligned with local regulations and privacy standards.


Power Up with Distributed AI Training

Thanks to network-connected clusters, you can enjoy efficient distributed AI training while reducing your costs by up to 50%.

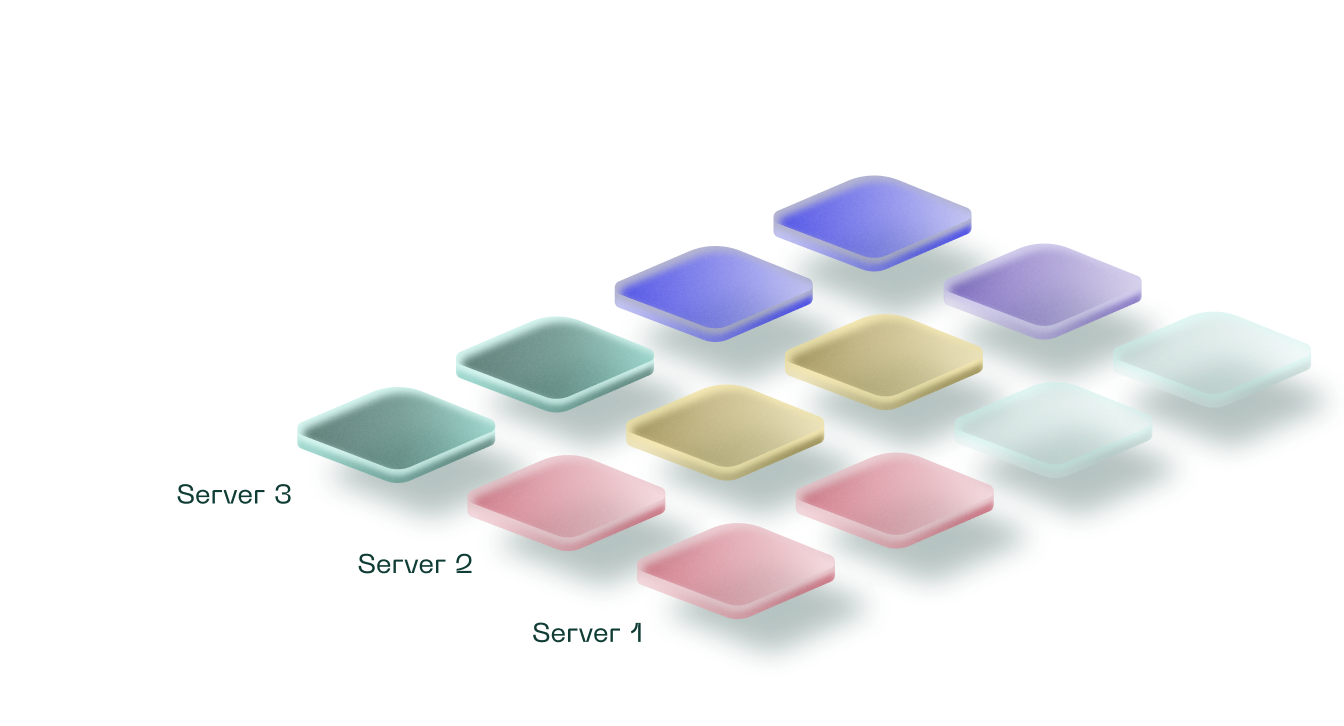

Tech infrastructure

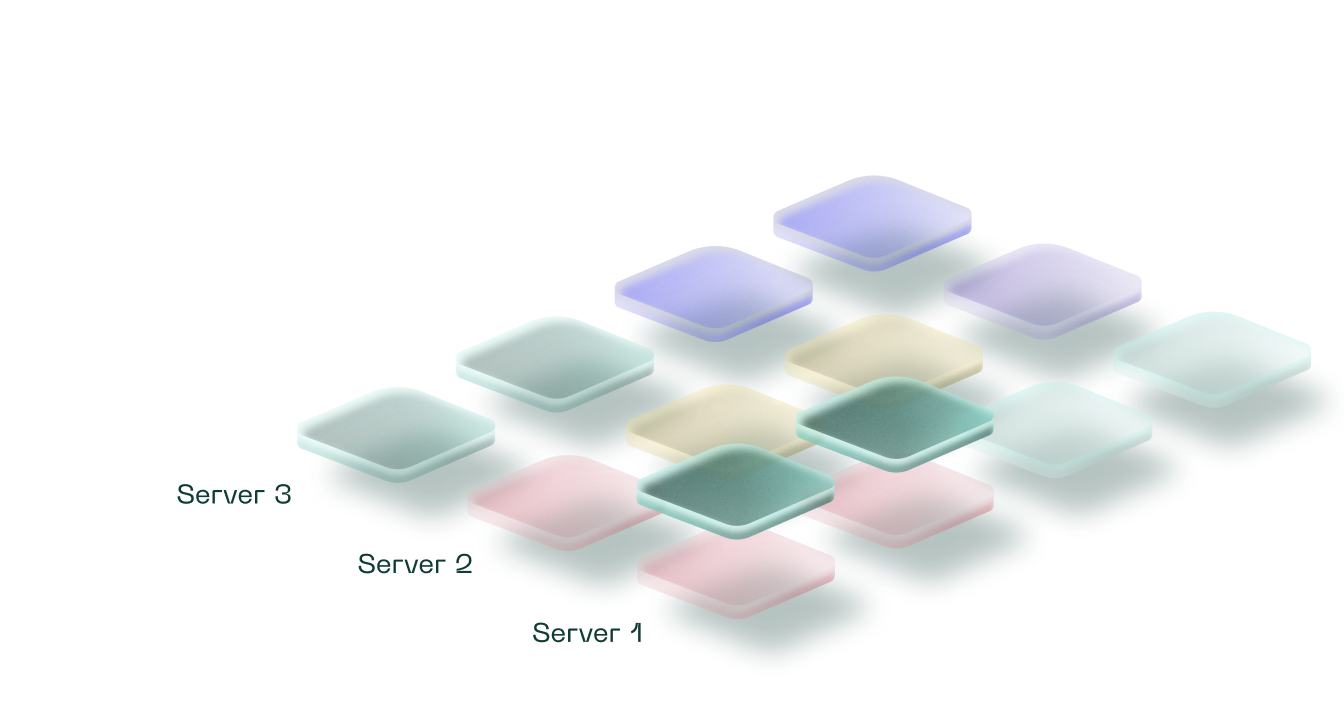

Here's an example of how it works: imagine a setup of servers, each equipped with 4 NVIDIA L40S GPUs.

GPU cluster allocation

GPUs are dynamically allocated into elastic clusters tailored to your workload, all automated by SLURM.
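For readers who have not used SLURM before, the sketch below shows roughly what a multi-node GPU job request looks like. It is a minimal illustration, not the platform's own tooling: the helper `render_sbatch`, the job name `train-demo`, and the script `train.py` are hypothetical, and it assumes one task per GPU. Only standard `sbatch` directives are used.

```python
def render_sbatch(job_name, nodes, gpus_per_node, command):
    """Return the text of a SLURM batch script requesting GPUs on several nodes.

    Hypothetical helper for illustration; it only formats standard
    sbatch directives and does not submit anything.
    """
    if nodes < 1 or gpus_per_node < 1:
        raise ValueError("nodes and gpus_per_node must be positive")
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        # Assumption: one task (process) per GPU on each node.
        f"#SBATCH --ntasks-per-node={gpus_per_node}",
        f"#SBATCH --gres=gpu:{gpus_per_node}",
        "",
        f"srun {command}",
    ]
    return "\n".join(lines) + "\n"


# Two servers with 4 GPUs each, matching the example setup above.
# "train.py" is a placeholder for your own training script.
script = render_sbatch("train-demo", nodes=2, gpus_per_node=4,
                       command="python train.py")
print(script)
```

Saving the printed text to a file and passing it to `sbatch` is the usual way to submit such a job on a SLURM cluster. Partition names and any site-specific options are left out because they depend on the cluster configuration.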

Shared GPU solution

To cut your costs, we've introduced a shared GPU solution that lets you share GPU capacity with other users.

Deep dive into AI models with Ollama hosting

No need for cloud GPUs. No external API calls. We provide Ollama hosting so you can run LLMs such as DeepSeek, Gemma, Llama, Phi, or Mistral with minimal setup.
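As a rough illustration of what talking to a hosted Ollama instance can look like, the sketch below sends a prompt to Ollama's `/api/generate` REST endpoint using only the Python standard library. The host address is an assumption (a placeholder using Ollama's default port 11434), and the function names are hypothetical; the address of your own hosted instance would go in its place.

```python
import json
import urllib.request

# Assumption: placeholder address. 11434 is Ollama's default port;
# replace this with the address of your own Ollama instance.
OLLAMA_HOST = "http://localhost:11434"


def build_generate_request(model, prompt, host=OLLAMA_HOST):
    """Build a POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for one JSON reply instead of a token stream
    }
    return urllib.request.Request(
        url=f"{host}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model, prompt, host=OLLAMA_HOST, timeout=120):
    """Send the prompt and return the generated text from the reply."""
    request = build_generate_request(model, prompt, host)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        body = json.loads(reply.read().decode("utf-8"))
    return body["response"]
```

With a model already pulled on the server, a call such as `generate("mistral", "Summarize distributed training in one sentence.")` would return the model's answer as a string.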

Go for it

Choose your VRAM, and we'll handle the rest

Optimize your workflow with our connected environments.

Efficient Training

Use a shared GPU cluster for training and cut costs by sharing resources with other users.

Dedicated Inference

Cost Control

Pricing

Big tech oversells; we keep it simple and clear. Get the GPU power you actually need, with the best value on the market guaranteed.

- Optimized for real-time availability

Onboard in Minutes

What's included

Ollama hosting for LLMs

- Run LLMs like DeepSeek, Llama, Mistral, Gemma

- Private and local

- Fast and lightweight

Elastic scalable environment

- Private network

- Shared file system

- SLURM-ready

Ready-to-use images

- Docker support via enroot

- SLURM integration

- Popular frameworks (Modulus, PyTorch, TensorFlow, ...)

Exclusive infrastructure

- Dedicated GPUs for your needs

- Custom NVIDIA drivers and libraries


What’s it like to use the InoCloud platform?

Hear what our client, Michal Takáč from DimensionLab, has to say about his experience.

At DimensionLab, InoCloud is our trusted partner for AI simulations. They understand our unique needs and deliver where giants like AWS fall short. Their tailored support is crucial for building our AI-driven simulations.

Great cooperation with dedicated GPU servers and on-hand support—InoCloud truly delivered beyond our expectations. I’m excited to see them grow into the EU’s leading AI computing provider in the near future.

As a medical training provider, we needed robust infrastructure. InoCloud delivered excellent performance and quick setup. Their personalized support and competitive pricing have been invaluable.

As an SME, we required transparent pricing and dedicated support. InoCloud delivered optimal performance and rapid setup. Their competitive pricing and personalized support made all the difference compared to other market options.

InoCloud’s team and platform have been a huge asset to our AI research, fully supporting our SML training needs. Their unbeatable value and exceptional service set them apart from other major players in GPU computing.

InoCloud offers a game-changing solution for developers needing powerful computing capabilities. By using their resources, we significantly enhanced our AI models, giving us a crucial edge in product performance and innovation.

InoCloud’s R&D Initiatives for Energy Efficiency and Sustainability

AI-Driven Energy Solutions

InoCloud develops AI algorithms that optimize energy use in data centers. By analyzing real-time data and forecasting renewable energy availability, these solutions improve efficiency and lower environmental impact.

Pilot Projects Utilizing Renewable Energy

The company launched pilot projects using surplus renewable energy for AI computations, demonstrating the feasibility of sustainable, on-demand power in modern data centers.

Sustainability Research

Ongoing research efforts focus on identifying best sustainability practices. InoCloud ensures environmental considerations are embedded in every aspect of its evolving business model.

Innovative Energy Solutions

InoCloud partners with industry leaders to drive renewable energy adoption. These collaborations enhance capabilities and solidify its role as a key innovator in sustainable data center solutions.

Multi-Tenant GPU Utilization

InoCloud is advancing multi-tenant GPU use, allowing multiple AI tasks on shared GPUs. By improving scheduling and virtualization, it maximizes hardware efficiency and reduces emissions.